AI Guardrails: Your Questions Answered

Everything you need to know about keeping Generative AI safe, accurate, and compliant with Altrum AI Guardrails.

What is Altrum AI Guardrails?

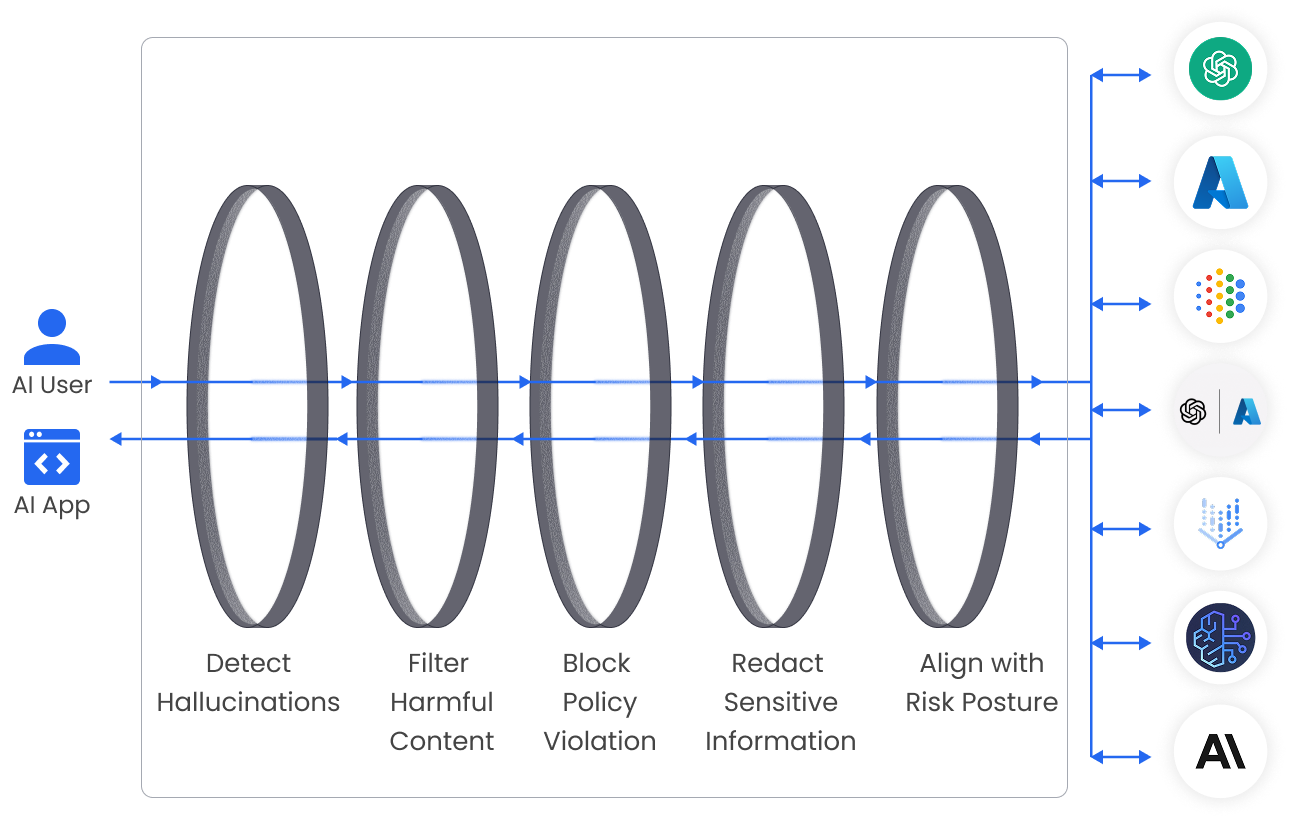

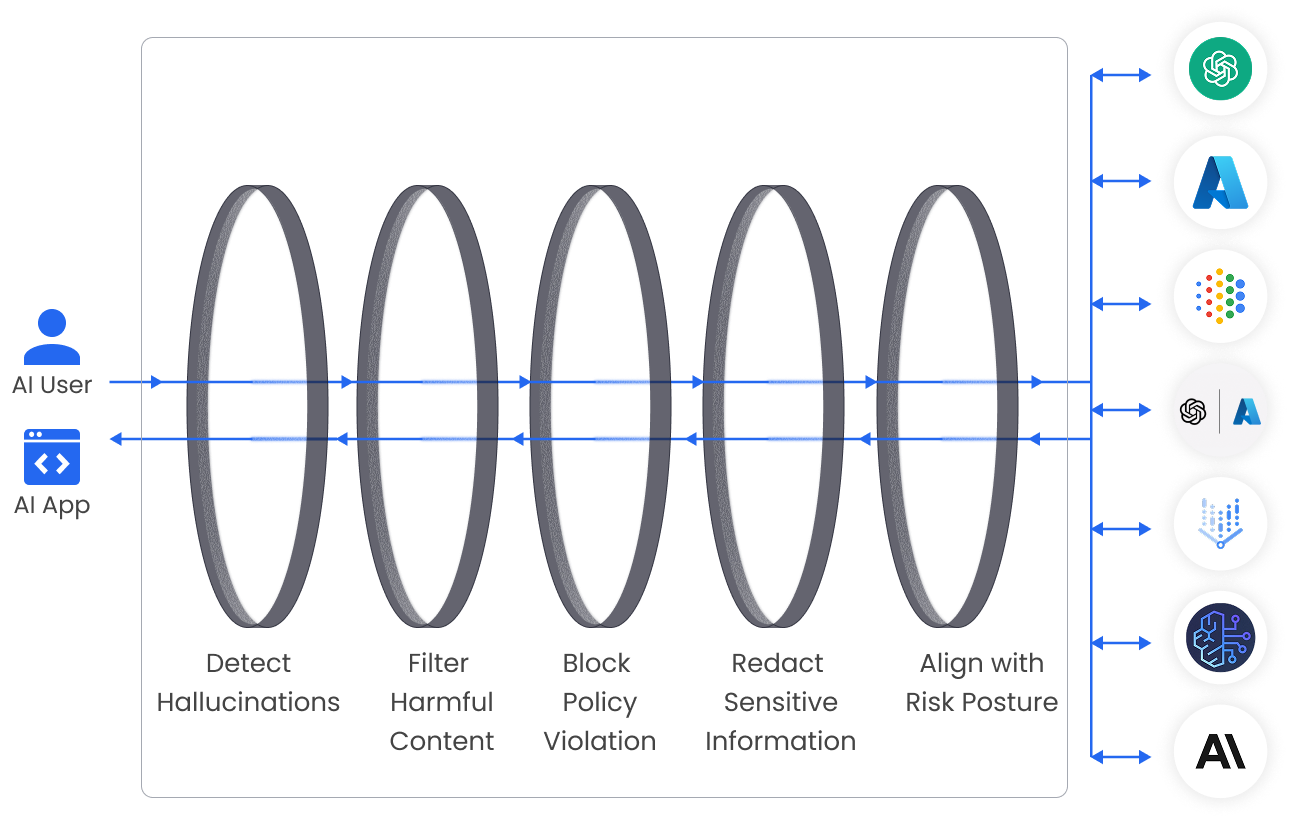

Altrum AI Guardrails is a policy-driven safeguard layer that protects Generative AI applications in real time. It detects hallucinations, blocks harmful content, redacts sensitive data, and enforces your responsible AI policies across any model or provider.

Which AI models and platforms are supported?

Altrum AI Guardrails works consistently across major AI providers, including OpenAI, Anthropic, Amazon Bedrock, Google AI, and Azure OpenAI, as well as fine-tuned and self-hosted models. It integrates through a single OpenAI-compatible endpoint.
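As a rough illustration of what "OpenAI-compatible" usually means in practice, the sketch below builds a standard chat completions request and points it at a guardrails endpoint instead of the provider. The base URL, API key, and model name are placeholders assumed for this example, not documented Altrum AI values; the request is only constructed here, not sent.

```python
import json
import urllib.request

# Hypothetical placeholders: the real base URL and key would come from your
# Altrum AI account. They are assumptions made for this sketch.
GUARDRAILS_BASE_URL = "https://guardrails.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_chat_request(model: str, user_message: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completions request aimed at the guardrails endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{GUARDRAILS_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The model name is also a placeholder; use whichever model you already call.
request = build_chat_request("gpt-4o-mini", "Summarise our refund policy.")
print(request.get_method(), request.full_url)
```

If the endpoint follows the OpenAI request shape, an existing application would typically only need to change its base URL and credentials; the message format stays the same.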

How does Altrum AI Guardrails help with compliance?

Guardrails enforces safety, privacy, and data protection measures aligned with regulations such as the EU AI Act, GDPR, and HIPAA. Every prompt and response is logged for transparency, helping simplify compliance reviews and audits.
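To make the logging idea concrete, here is a minimal sketch of what an audit record for one prompt and response pair could look like. The field names and the checksum are assumptions chosen for illustration; they do not describe the actual log format used by Altrum AI Guardrails.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(prompt: str, response: str, model: str) -> dict:
    """Return an illustrative audit record for one prompt/response pair."""
    content = {"model": model, "prompt": prompt, "response": response}
    # Hash the content fields in a stable key order so a reviewer can later
    # check that the logged text has not been altered.
    digest = hashlib.sha256(
        json.dumps(content, sort_keys=True).encode("utf-8")
    ).hexdigest()
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        **content,
        "sha256": digest,
    }


record = make_audit_record(
    prompt="What is our data retention period?",
    response="Customer records are retained for six years.",
    model="example-model",  # hypothetical model name
)
print(json.dumps(record, indent=2))
```

Writing one such record per line to an append-only file or log store is a common way to keep a reviewable trail for audits.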

Can Guardrails prevent prompt injection and jailbreaks?

Yes. Altrum AI Guardrails includes a dedicated Prompt Injection Protection feature that detects and blocks malicious attempts to override system instructions, inject harmful content, or bypass security controls.

How quickly can we deploy Altrum AI Guardrails?

Most organisations can integrate Altrum AI Guardrails within days. It requires minimal engineering effort and scales from pilot projects to full enterprise adoption without slowing down innovation.